DRBSD-9

In cooperation with IEEE Computer Society and ACM

Held in conjunction with SC23: The International Conference for High Performance Computing, Networking, Storage and Analysis

Program

Time: 2pm - 5:30pm MST; Location: 507

Link to SC23 workshop page

| 2:00 - 2:05 | Opening Remarks and Welcome |

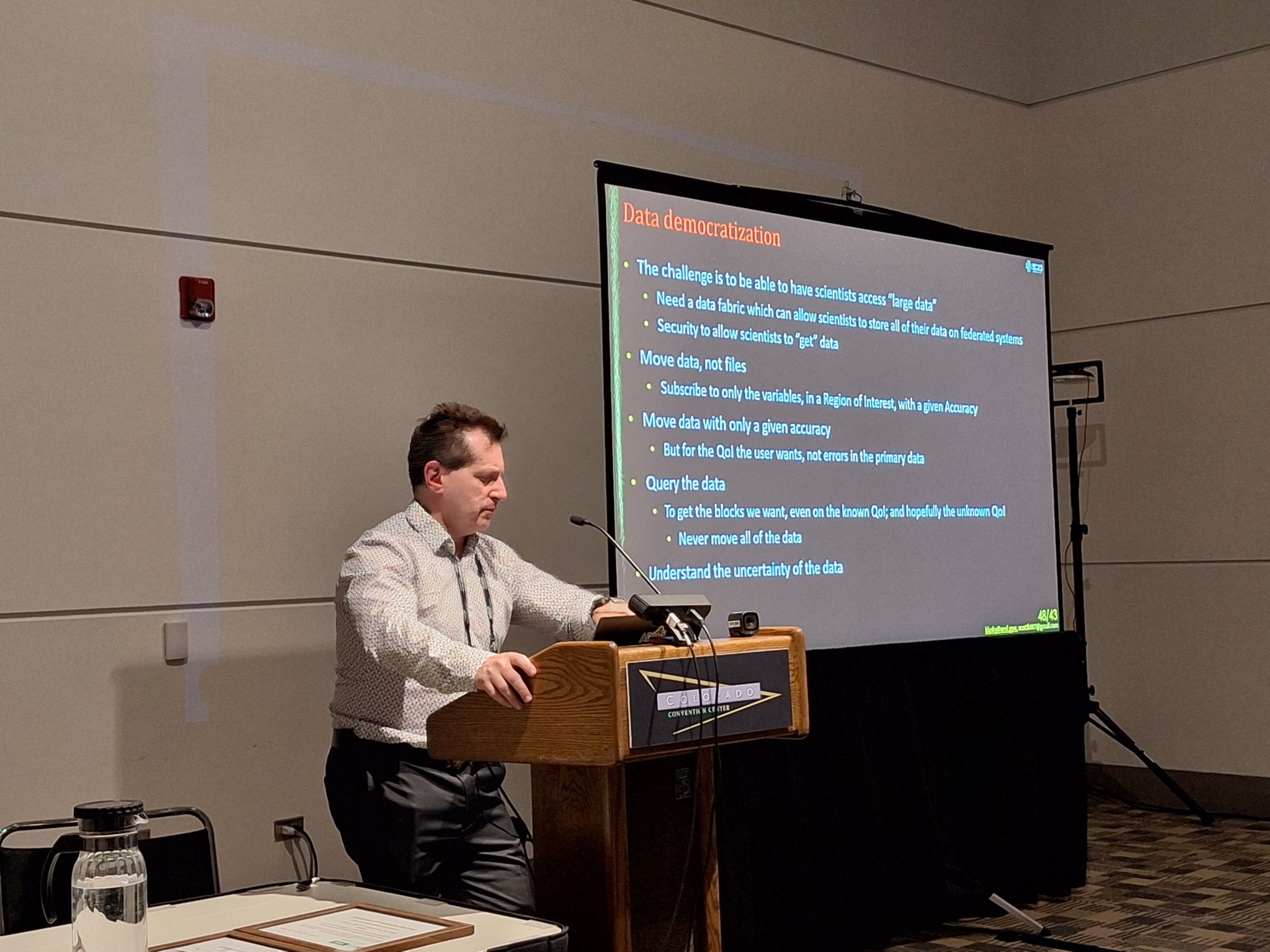

| 2:05 - 2:50 | Invited Talk: Scientific Data Democratization: Enabling Efficient Access and Analysis of Large-Scale Scientific Data Scott Klasky, ORNL |

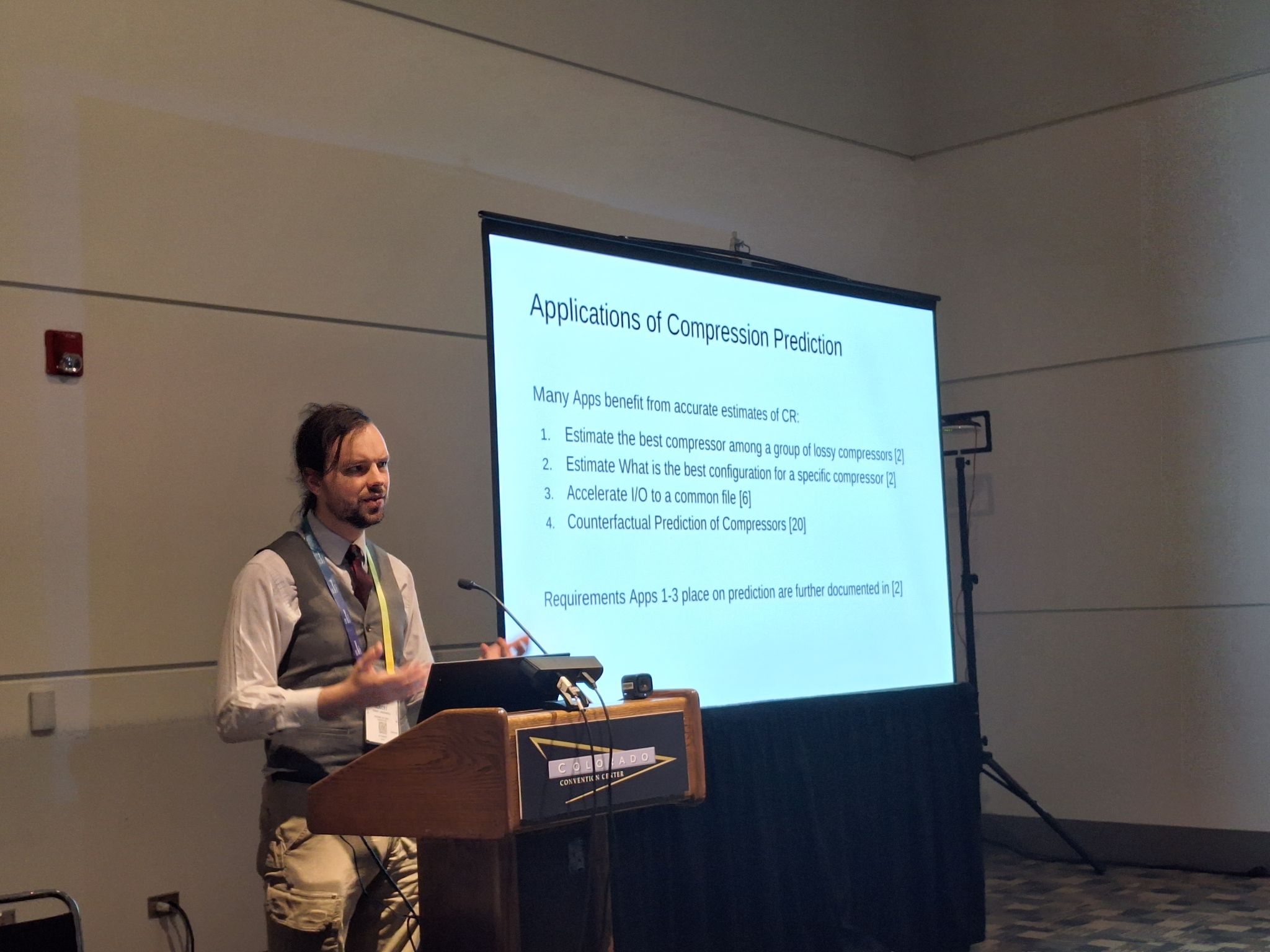

| 2:50 - 3:15 | LibPressio-Predict: Flexible and Fast Infrastructure for Inferring Compression Performance Robert R. Underwood, Sheng Di, Sian Jin, Md Hasanur Rahman, Arham Khan, Franck Cappello |

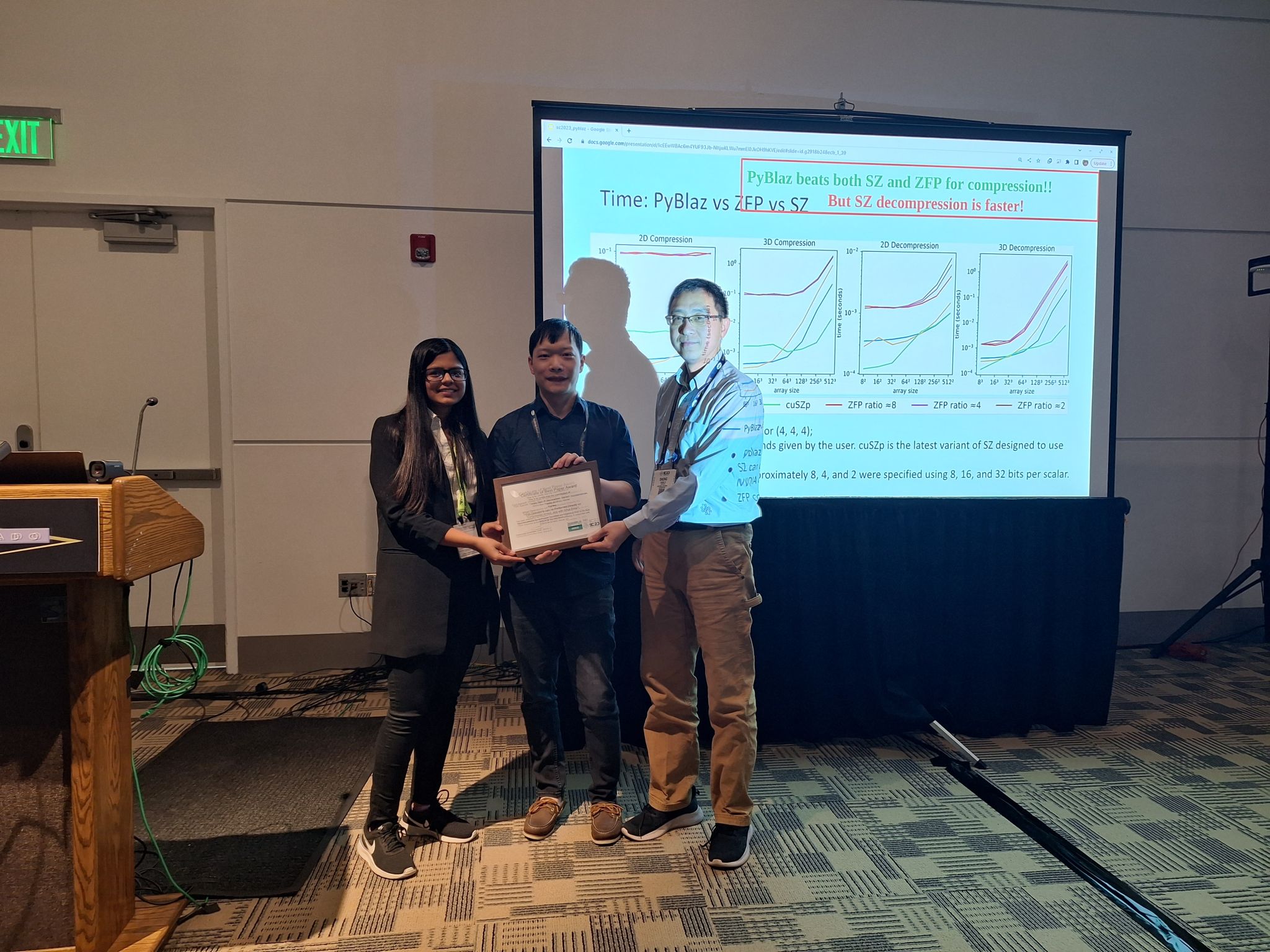

| 3:15 - 3:40 | What Operations can be Performed Directly on Compressed Arrays, and with What Error? Tripti Agarwal, Harvey Dam, Ganesh Gopalakrishnan, Dorra Ben Khalifa, Matthieu Martel Best Paper Award |

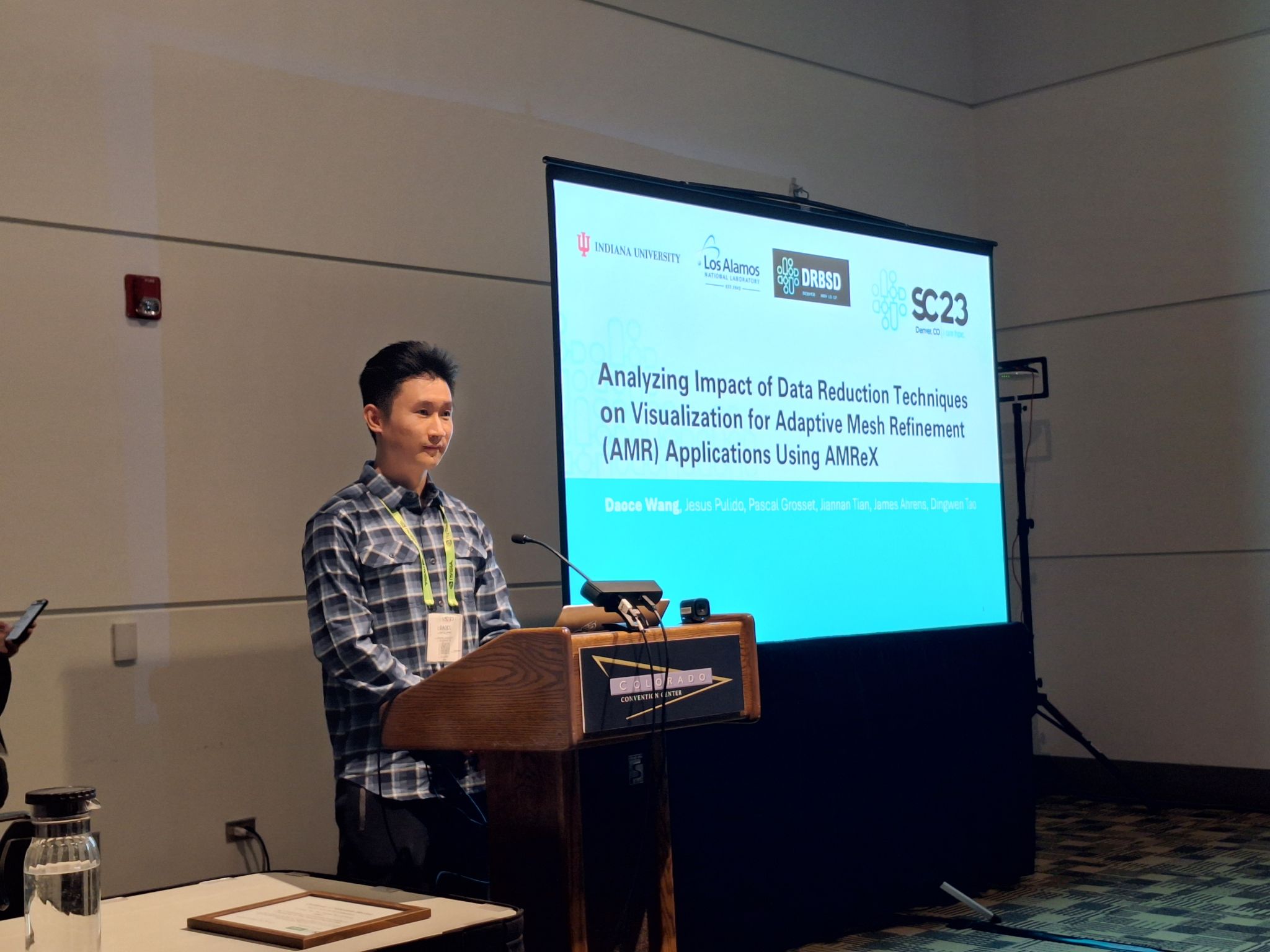

| 3:40 - 4:05 | Analyzing Impact of Data Reduction Techniques on Visualization for AMR Applications Using AMReX Framework Daoce Wang, Jesus Pulido, Pascal Grosset, Jiannan Tian, James Ahrens, Dingwen Tao |

| 4:05 - 4:30 | Fast 2D Bicephalous Convolutional Autoencoder for compressing 3D Time Projection Chamber Data Yi Huang, Yihui Ren, Shinjae Yoo, Jin Huang Best Paper Finalist |

| 4:30 - 4:55 | Streaming Hardware Compressor Generator Framework Kazutomo Yoshii, Tomohiro Ueno, Kentaro Sano, Antonino Miceli, Franck Cappello |

| 4:55 - 5:20 | Lossy and Lossless Compression for BioFilm Optical Coherence Tomography (OCT) Max Faykus III, Jon Calhoun, Melissa Smith |

| 5:20 - 5:30 | Closing Remarks |

Topics

A growing disparity between simulation speeds and I/O rates makes it increasingly infeasible for high-performance applications to save all results for offline analysis. By 2024, computers are expected to compute at 10^18 ops/sec but write to disk only at 10^12 bytes/sec: a compute-to-output ratio 200 times worse than on the first petascale system. In this new world, applications must increasingly perform online data analysis and reduction, tasks that introduce algorithmic, implementation, and programming model challenges that are unfamiliar to many scientists and that have major implications for the design and use of various elements of exascale systems.
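As a rough illustration of the arithmetic behind that claim, the sketch below reads the two quoted rates as 10^18 operations per second and 10^12 bytes per second and works out the resulting compute-to-output ratio. The variable names are illustrative, and the petascale baseline is not stated in the text: it is only what the "200 times worse" figure implies.

```python
# Rates quoted in the paragraph above (read as powers of ten).
compute_rate_ops_per_s = 10**18   # expected compute rate
write_rate_bytes_per_s = 10**12   # expected disk write rate
worsening_factor = 200            # "200 times worse" than the first petascale system

# Operations performed for every byte that can be written to disk.
ops_per_byte_written = compute_rate_ops_per_s // write_rate_bytes_per_s

# Baseline implied by the quoted factor (a derived value, not a figure from the text).
implied_petascale_ops_per_byte = ops_per_byte_written // worsening_factor

print(f"Projected ratio: {ops_per_byte_written:,} ops per byte written")
print(f"Implied petascale ratio: {implied_petascale_ops_per_byte:,} ops per byte written")
```

Under these assumptions, roughly a million operations are performed for each byte that reaches disk, which is why saving all results for offline analysis becomes impractical.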

This trend has spurred interest in high-performance online data analysis and reduction methods, motivated by a desire to conserve I/O bandwidth, storage, and/or power; increase accuracy of data analysis results; and/or make optimal use of parallel platforms, among other factors. This requires our community to understand the clear yet complex relationships between application design, data analysis and reduction methods, programming models, system software, hardware, and other elements of a next-generation High Performance Computer, particularly given constraints such as applicability, fidelity, performance portability, and power efficiency.

There are at least three important questions that our community is striving to answer: (1) whether several orders of magnitude of data reduction is possible for exascale sciences; (2) what the trade-off is between the performance and the accuracy of data reduction; and (3) how to effectively reduce data while preserving the information hidden in large scientific data. Tackling these challenges requires expertise from computer science, mathematics, and application domains to study the problem holistically and to develop solutions and hardened software tools that can be used by production applications.

The goal of this workshop is to provide a focused venue for researchers in all aspects of data reduction and analysis to present their research results, exchange ideas, identify new research directions, and foster new collaborations within the community.

Topics of interest include but are not limited to:

• Data reduction methods for scientific data

° Data deduplication methods

° Motif-specific methods (structured and unstructured meshes, particles, tensors, ...)

° Methods with accuracy guarantees

° Feature/QoI-preserving reduction

° Optimal design of data reduction methods

° Compressed sensing and singular value decomposition

• Metrics to measure reduction quality and provide feedback

• Data analysis and visualization techniques that take advantage of the reduced data

° AI/ML methods

° Surrogate/reduced-order models

° Feature extraction

° Visualization techniques

° Artifact removal during reconstruction

° Methods that take advantage of the reduced data

• Data analysis and reduction co-design

° Methods for using accelerators

° Accuracy and performance trade-offs on current and emerging hardware

° New programming models for managing reduced data

° Runtime systems for data reduction

• Large-scale code coupling and workflows

• Experience of applying data reduction and analysis in practical applications or use-cases

° State of the practice

° Application use-cases which can drive the community to develop MiniApps

Submission

Important Dates

Full Paper submission deadline: September 5, 2023 (AoE), extended from August 26, 2023

Author notification: September 13, 2023

Camera-ready final papers submission deadline: September 29, 2023 (AoE)

Remote presentation videos (Optional) submission deadline: September 30, 2023 (AoE)

Submissions

• Papers should be submitted electronically on the SC Submission Website.

https://submissions.supercomputing.org

• Paper submission should be in single-blind ACM format.

https://www.acm.org/publications/proceedings-template

• DRBSD-9 will accept full papers (limited to 8 pages excluding references/appendix and 10 pages including references/appendix) and extended abstracts (2 pages including references).

• Submitted papers will be evaluated by at least 3 reviewers based upon technical merits. The accepted papers will be published with IEEE/ACM.

• DRBSD-9 encourages, but does not require, submissions to include an artifact description and evaluation. Details: https://sc23.supercomputing.org/program/papers/reproducibility-initiative/

• DRBSD-9 will select papers for Best Paper Award and Best Paper Runner-up Award.

Committee Members

Organizing Committee

Jieyang Chen, Oak Ridge National Laboratory

Ana Gainaru, Oak Ridge National Laboratory

Xin Liang, University of Kentucky

Todd Munson, Argonne National Laboratory

Sheng Di, Argonne National Laboratory

Program Chair

Dingwen Tao, Indiana University

Steering Committee

Ian Foster, Argonne National Laboratory/University of Chicago

Scott Klasky, Oak Ridge National Laboratory

Qing Liu, New Jersey Institute of Technology

Technical Program Committee

Allison Baker, National Center for Atmospheric Research (NCAR)

Dan Huang, Sun Yat-sen University

Dorit M. Hammerling, Colorado School of Mines

Franck Cappello, Argonne National Laboratory

Ian Foster, Argonne National Laboratory/University of Chicago

Jakob Luettgau, University of Tennessee

Jieyang Chen, Oak Ridge National Laboratory

Kerstin Kleese van Dam, Brookhaven National Laboratory

John Wu, Lawrence Berkeley National Laboratory

Martin Burtscher, Texas State University

Michela Taufer, University of Tennessee

Peter Lindstrom, Lawrence Livermore National Laboratory

Scott Klasky, Oak Ridge National Laboratory

Sheng Di, Argonne National Laboratory

Todd Munson, Argonne National Laboratory

Wen Xia, Harbin Institute of Technology, China

Xin Liang, University of Kentucky

Xubin He, Temple University